The HttpClient class is used to make HTTP calls. It comes with convenience methods such as GetAsync and PostAsync that make it easy to use. Responses are represented by the HttpResponseMessage class, and HttpClient also has a pretty convenient GetStringAsync method that returns the response body directly as a string.

Therefore, in most projects, this is the kind of code we see:

    private static async Task<List<Model>> BasicCallAsync()
    {
        using (var client = new HttpClient())
        {
            var content = await client.GetStringAsync(Url);
            return JsonConvert.DeserializeObject<List<Model>>(content);
        }
    }

This code is simple and efficient, but it misses three major points:

- Cancellation support

- Proper error management

- Memory management

Cancellation support

Why support cancellation

When developing an application, you will want and need to handle cancellations.

The first and obvious case is for timeouts. You do not want your network call to last forever or for too long. To avoid any unwanted behaviour, it is necessary to add custom timeouts for network calls.

The second case is simply cancelling an unfinished call that became unnecessary. For example, when the user quits or closes the screen that initiated this call.

Adding cancellations

In .NET the CancellationToken and CancellationTokenSource are used for that.

In the previous code, there is no way to pass a CancellationToken to the GetStringAsync method (an overload that accepts one was only added in .NET 5).

Instead, we use the following snippet and pass the CancellationToken instance to the SendAsync method.

    private static async Task<List<Model>> CancellableCallAsync(CancellationToken cancellationToken)
    {
        using (var client = new HttpClient())
        using (var request = new HttpRequestMessage(HttpMethod.Get, Url))
        using (var response = await client.SendAsync(request, cancellationToken))
        {
            var content = await response.Content.ReadAsStringAsync();
            return JsonConvert.DeserializeObject<List<Model>>(content);
        }
    }
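Both cancellation cases described earlier, a timeout and a call that became unnecessary, can be combined into a single token by the caller. The following is only a minimal sketch: `SlowCallAsync` is a hypothetical stand-in for `CancellableCallAsync` (it uses `Task.Delay` so that it runs without a network), and the class and method names are assumptions made for illustration.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class CancellationDemo
{
    // Hypothetical stand-in for CancellableCallAsync: waits instead of calling the network.
    public static async Task<string> SlowCallAsync(TimeSpan duration, CancellationToken cancellationToken)
    {
        await Task.Delay(duration, cancellationToken);
        return "done";
    }

    // Returns the result, or null when the timeout elapsed or the user cancelled first.
    public static async Task<string> CallWithTimeoutAsync(
        TimeSpan duration, TimeSpan timeout, CancellationToken userToken)
    {
        // The first source cancels itself once the timeout elapses.
        using (var timeoutSource = new CancellationTokenSource(timeout))
        // The linked source is cancelled when either the timeout or the user token fires.
        using (var linked = CancellationTokenSource.CreateLinkedTokenSource(timeoutSource.Token, userToken))
        {
            try
            {
                return await SlowCallAsync(duration, linked.Token);
            }
            catch (OperationCanceledException)
            {
                // TaskCanceledException derives from OperationCanceledException.
                return null;
            }
        }
    }
}
```

With a real HttpClient call, the same pattern applies: SendAsync throws an exception derived from OperationCanceledException when the token is cancelled, so the caller can tell a cancellation apart from a network failure.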

Error management

Basic error management

The network is probably the biggest source of failures. As application developers, it is our job to handle these errors and show them to our users in a comprehensible way.

When I review code, this is one of the first things that I will look for. If I am lucky, I find code where there is a form of error handling thanks to the EnsureSuccessStatusCode method.

    private static async Task<List<Model>> CheckNetworkErrorCallAsync(CancellationToken cancellationToken)
    {
        using (var client = new HttpClient())
        using (var request = new HttpRequestMessage(HttpMethod.Get, Url))
        using (var response = await client.SendAsync(request, cancellationToken))
        {
            response.EnsureSuccessStatusCode();
            var content = await response.Content.ReadAsStringAsync();
            return JsonConvert.DeserializeObject<List<Model>>(content);
        }
    }

This code throws an HttpRequestException whenever the status code of the response is not a success code (that is, outside the 200-299 range).

Let’s now consider the following HTTP response:

    HTTP/1.1 401 Unauthorized
    Content-Type: application/json; charset=utf-8
    Content-Encoding: gzip

    Account locked

When this response is received, an exception is thrown. This exception does not contain anything from the body of the response. Therefore, when the caller of the method catches it, it has no information about exactly what went wrong and cannot tell the user that their account is locked.

Custom exception

In order to have more information about what happened, we need a custom exception. Its exact shape differs from project to project, but I consider it a best practice to have one for network calls.

The previous code can be altered to look like the following:

    public class ApiException : Exception
    {
        public int StatusCode { get; set; }
        public string Content { get; set; }
    }

    private static async Task<List<Model>> CustomExceptionCallAsync(CancellationToken cancellationToken)
    {
        using (var client = new HttpClient())
        using (var request = new HttpRequestMessage(HttpMethod.Get, Url))
        using (var response = await client.SendAsync(request, cancellationToken))
        {
            var content = await response.Content.ReadAsStringAsync();

            if (!response.IsSuccessStatusCode)
            {
                throw new ApiException
                {
                    StatusCode = (int)response.StatusCode,
                    Content = content
                };
            }

            return JsonConvert.DeserializeObject<List<Model>>(content);
        }
    }
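On the calling side, the exception now carries enough information to build a message for the user. The sketch below is only an illustration of that idea: `ErrorMessages` and `ToUserMessage` are hypothetical names, the messages are placeholders, and `ApiException` is repeated with the same shape as above so that the example compiles on its own.

```csharp
using System;

// Same shape as the ApiException defined above, repeated so the sketch is self-contained.
public class ApiException : Exception
{
    public int StatusCode { get; set; }
    public string Content { get; set; }
}

public static class ErrorMessages
{
    // Hypothetical helper: turns an ApiException into text suitable for the user.
    public static string ToUserMessage(ApiException exception)
    {
        switch (exception.StatusCode)
        {
            case 401:
                // The body carries the server's explanation, e.g. "Account locked".
                return string.IsNullOrWhiteSpace(exception.Content)
                    ? "You are not authorized."
                    : exception.Content;
            case 404:
                return "The requested resource was not found.";
            default:
                return "The request failed with status " + exception.StatusCode + ".";
        }
    }
}
```

A caller would wrap `CustomExceptionCallAsync` in a `try` block, catch `ApiException`, and display the result of `ToUserMessage`. For the 401 response shown earlier, that would be "Account locked".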

Memory management

Start using streams

All the previous samples have something in common. They first dump the content of the HTTP response in a string and then deserialize it into an object graph. For big JSON files it’s a total waste of memory. In .NET we have amazing little things called streams. And Json.NET knows how to handle them. With streams we can work with the data without having to first dump it into a string.

Let’s create a method to deserialize JSON from a stream:

    private static T DeserializeJsonFromStream<T>(Stream stream)
    {
        if (stream == null || !stream.CanRead)
            return default(T);

        using (var sr = new StreamReader(stream))
        using (var jtr = new JsonTextReader(sr))
        {
            var js = new JsonSerializer();
            return js.Deserialize<T>(jtr);
        }
    }
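To try this helper without a network call, it can be fed a MemoryStream. This is only a sketch under stated assumptions: the `Model` class here is a hypothetical one-property placeholder (the real one is generated from the JSON file), `StreamDemo` and `ParseSample` are illustrative names, and the Json.NET (Newtonsoft.Json) package is assumed to be referenced.

```csharp
using System.Collections.Generic;
using System.IO;
using System.Text;
using Newtonsoft.Json;

// Hypothetical placeholder for the generated Model class.
public class Model
{
    public string Name { get; set; }
}

public static class StreamDemo
{
    // Same logic as DeserializeJsonFromStream above.
    public static T DeserializeJsonFromStream<T>(Stream stream)
    {
        if (stream == null || !stream.CanRead)
            return default(T);

        using (var sr = new StreamReader(stream))
        using (var jtr = new JsonTextReader(sr))
        {
            return new JsonSerializer().Deserialize<T>(jtr);
        }
    }

    // Deserializes a small in-memory JSON array straight from a stream.
    public static List<Model> ParseSample()
    {
        var json = "[{\"Name\":\"first\"},{\"Name\":\"second\"}]";
        using (var stream = new MemoryStream(Encoding.UTF8.GetBytes(json)))
        {
            return DeserializeJsonFromStream<List<Model>>(stream);
        }
    }
}
```

Note that the StreamReader disposes the underlying stream when it is disposed, so the stream cannot be read again after the helper returns.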

In case of error, we want to get the error string, so we need to read the body into a string. Let’s create a method just for that:

    private static async Task<string> StreamToStringAsync(Stream stream)
    {
        string content = null;

        if (stream != null)
        {
            using (var sr = new StreamReader(stream))
            {
                content = await sr.ReadToEndAsync();
            }
        }

        return content;
    }

The network call method now looks like this:

    private static async Task<List<Model>> DeserializeFromStreamCallAsync(CancellationToken cancellationToken)
    {
        using (var client = new HttpClient())
        using (var request = new HttpRequestMessage(HttpMethod.Get, Url))
        using (var response = await client.SendAsync(request, cancellationToken))
        {
            var stream = await response.Content.ReadAsStreamAsync();

            if (response.IsSuccessStatusCode)
                return DeserializeJsonFromStream<List<Model>>(stream);

            var content = await StreamToStringAsync(stream);
            throw new ApiException
            {
                StatusCode = (int)response.StatusCode,
                Content = content
            };
        }
    }

Using stream features

In the previous code, we wait until all the data is in memory before deserializing it: by default, SendAsync completes only once the whole response body has been buffered. But a nice feature of streams is that we can start working on them before all of their data has been received.

To enable this feature with the HttpClient, we just need to use the HttpCompletionOption.ResponseHeadersRead parameter:

    private static async Task<List<Model>> DeserializeOptimizedFromStreamCallAsync(CancellationToken cancellationToken)
    {
        using (var client = new HttpClient())
        using (var request = new HttpRequestMessage(HttpMethod.Get, Url))
        using (var response = await client.SendAsync(request, HttpCompletionOption.ResponseHeadersRead, cancellationToken))
        {
            var stream = await response.Content.ReadAsStreamAsync();

            if (response.IsSuccessStatusCode)
                return DeserializeJsonFromStream<List<Model>>(stream);

            var content = await StreamToStringAsync(stream);
            throw new ApiException
            {
                StatusCode = (int)response.StatusCode,
                Content = content
            };
        }
    }

Without changing anything else in the previous code, we just optimized our memory usage and speed.

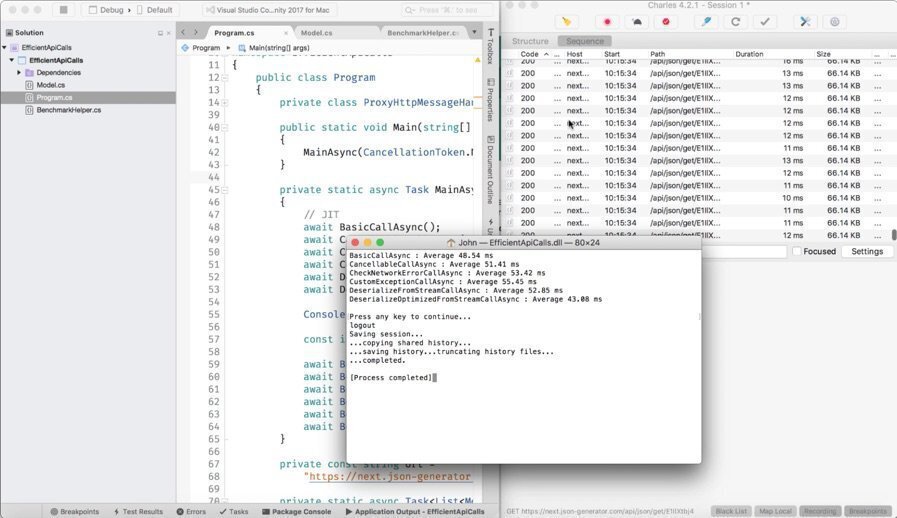

Benchmark

Setting up

For this post I chose to generate a big JSON file with json-generator.com which is great to test performance and memory usage.

I then used the excellent json2csharp tool to generate model classes from the previous JSON.

Results

If you need to be convinced please take a look at the following benchmark video and its associated source code.

I created a simple .NET Core Console Application with each of the previous methods. I called them a hundred times and displayed the average execution time for each method.

In order to always have more or less the same network speed for each call, I used CharlesProxy, which serves a local file instead of going out on the internet.

This enables us to see the overall process without having to worry that the network speed may vary too much, which would make the benchmark useless.
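For readers who want to reproduce this kind of measurement, a timing harness could look like the sketch below. It is not the code used for the benchmark in this post: the `Benchmark` class and `AverageMillisecondsAsync` method are hypothetical names, and the approach (a single Stopwatch accumulating over sequential runs) is just one simple way to compute an average.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class Benchmark
{
    // Runs the action `runs` times sequentially and returns the average duration in milliseconds.
    public static async Task<double> AverageMillisecondsAsync(Func<Task> action, int runs)
    {
        if (runs <= 0)
            throw new ArgumentOutOfRangeException(nameof(runs));

        var stopwatch = new Stopwatch();
        for (var i = 0; i < runs; i++)
        {
            // Start resumes the stopwatch, so elapsed time accumulates across runs.
            stopwatch.Start();
            await action();
            stopwatch.Stop();
        }

        return stopwatch.Elapsed.TotalMilliseconds / runs;
    }
}
```

Each method from this post could then be measured with a call such as `await Benchmark.AverageMillisecondsAsync(() => BasicCallAsync(), 100)`.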

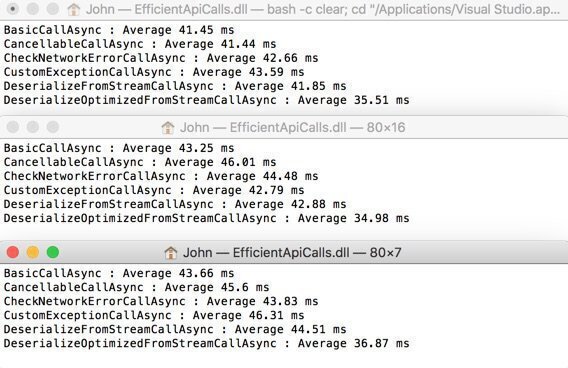

HttpClient reuse

There is always a lot of debate regarding HttpClient reuse: should I use one instance of HttpClient per application, or one per call?

As I am a mobile guy doing Xamarin development, I tend to prefer using Dispose whenever it is available. There is, in my experience, no real impact of creating one for each call.

If you happen to have some time, feel free to look for these debates on the web. One thing is sure though: on servers, you really should use only one instance. To know why, please read this great post.

For those of you who favor HttpClient reuse, I did 3 runs of the previous benchmark with just one HttpClient instance and, as you can expect, it is faster.
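For reference, reusing one instance could be sketched as follows. This is not the exact code used for those runs: `SharedHttp` and its `GetAsync` helper are hypothetical names, and a static field is only one of several ways to share an instance.

```csharp
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public static class SharedHttp
{
    // One instance for the whole application, never disposed per call.
    // HttpClient request methods such as GetAsync and SendAsync can be used concurrently.
    public static readonly HttpClient Client = new HttpClient();

    // Hypothetical helper: the caller is responsible for disposing the returned response.
    public static Task<HttpResponseMessage> GetAsync(string url, CancellationToken cancellationToken)
    {
        // ResponseHeadersRead keeps the streaming behaviour described earlier in this post.
        return Client.GetAsync(url, HttpCompletionOption.ResponseHeadersRead, cancellationToken);
    }
}
```

The methods shown earlier would then drop their `using (var client = new HttpClient())` line and call `SharedHttp.Client` instead, while still disposing the request and the response.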
